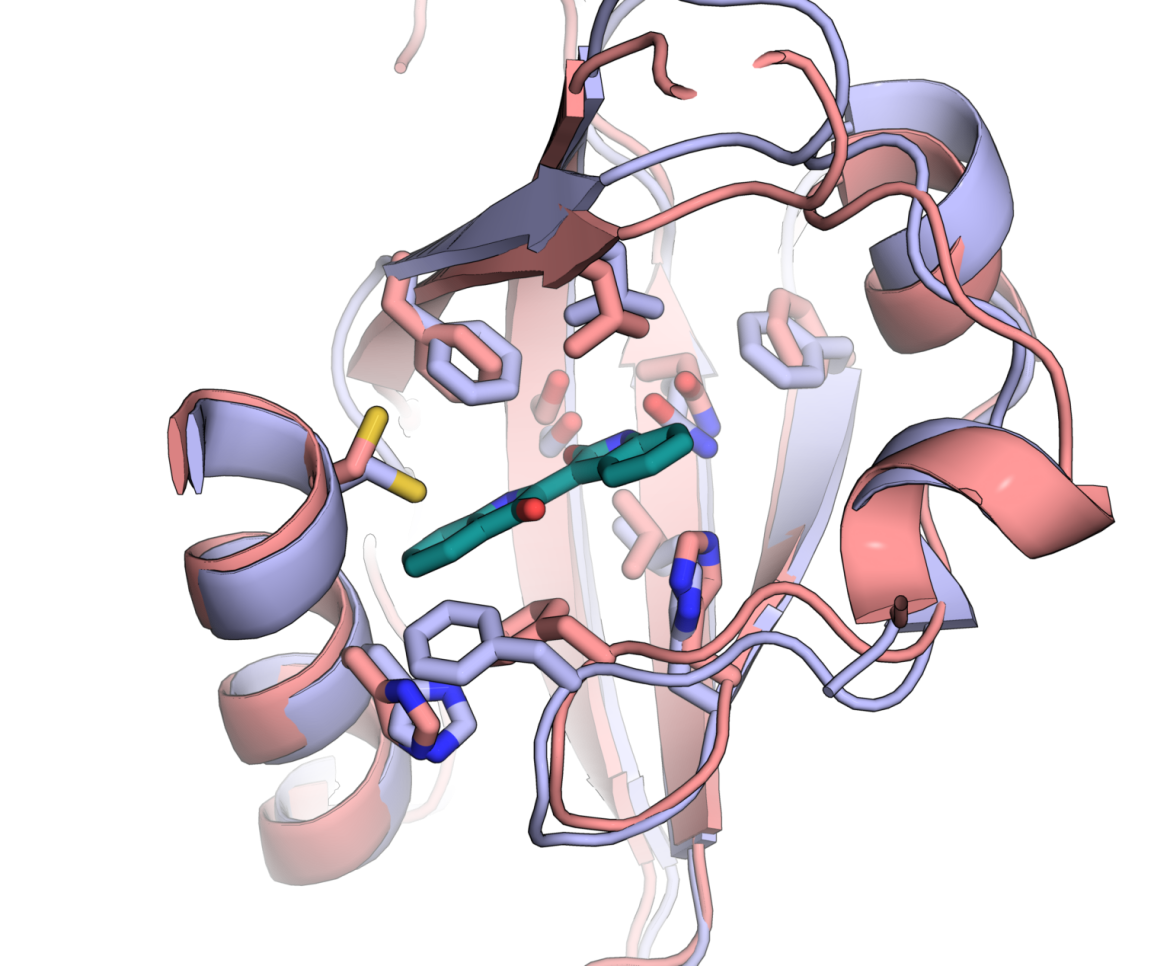


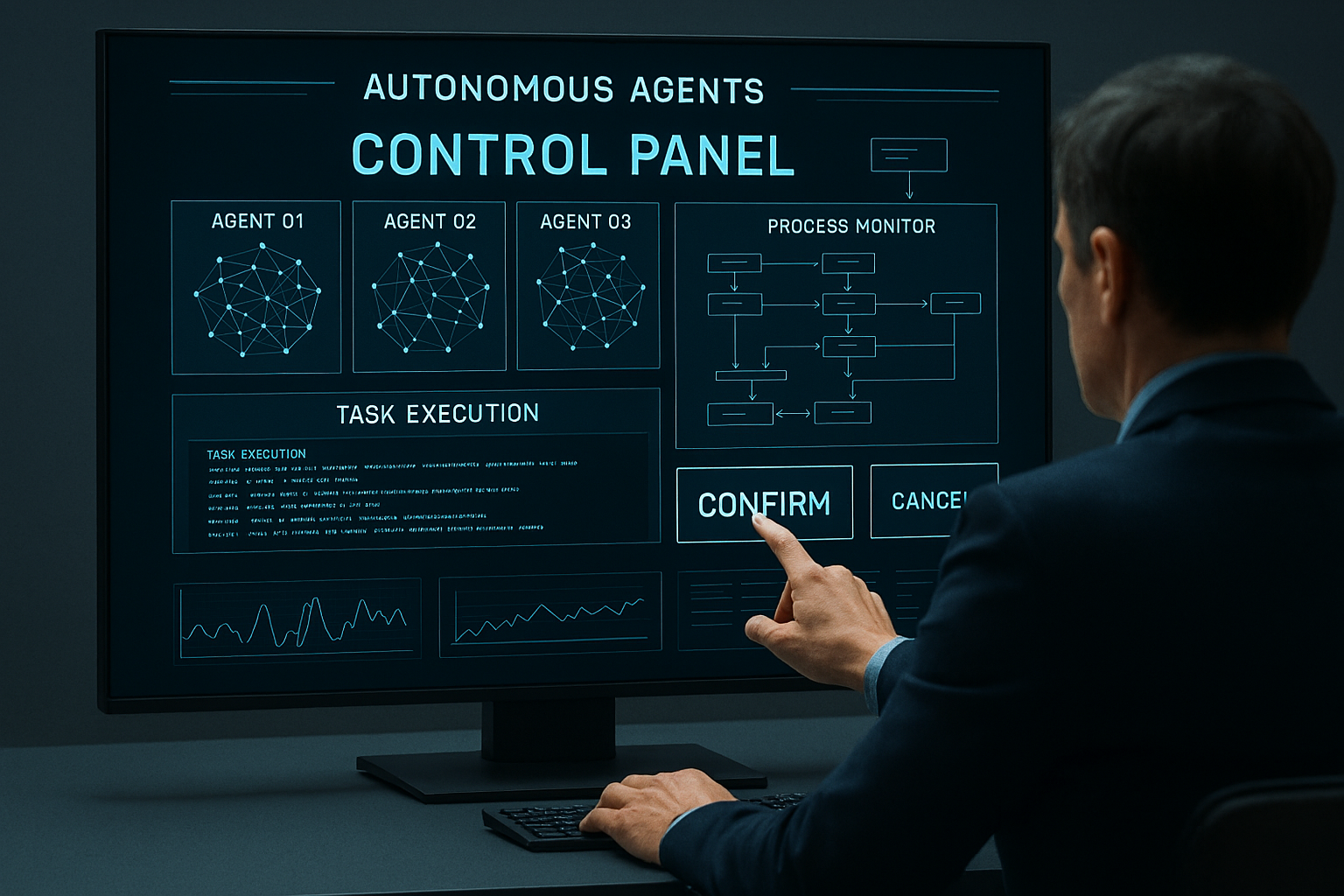

As 2025 comes to a close, the center of gravity of artificial intelligence has shifted. The industry is moving decisively from generating content to executing actions, as autonomous AI agents begin to operate across enterprise systems.

Rather than responding to isolated prompts, these systems are designed to pursue objectives—interacting with tools, data and infrastructure with limited human supervision.

Enterprise adoption of agent-based AI systems has accelerated throughout late 2025. Built on large multimodal foundations—including Google’s Gemini architecture—these systems combine language understanding with planning and tool use.

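Unlike the conversational models of previous years, agentic systems can break a goal into steps, call external tools, and act on data and infrastructure with limited human supervision.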

This represents a structural shift: AI systems are no longer just producing outputs—they are executing decisions.

Many modern agents spend additional computation at inference time on extended reasoning, an approach often described as scaling inference-time compute. This lets a model evaluate intermediate steps before it acts.

Google has publicly described this direction in its work on advanced reasoning capabilities, emphasizing multi-step planning and tool orchestration.

While this improves performance on complex tasks, it also increases opacity. The reasoning process is often difficult to audit after the fact—especially in asynchronous systems.
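The article does not describe how any particular vendor implements this. As a rough illustration only, the sketch below shows one simple, well-known way to spend extra compute before acting: best-of-N selection, where several candidate plans are drafted, each is scored, and the agent acts only if the best one clears a threshold. `propose`, `score`, `n` and `min_score` are hypothetical stand-ins for a model and a verifier, not any real API.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    plan: str
    score: float


def deliberate(
    propose: Callable[[int], str],   # hypothetical: drafts the i-th candidate plan
    score: Callable[[str], float],   # hypothetical: verifier that rates a plan
    n: int = 4,
    min_score: float = 0.5,
) -> Optional[Candidate]:
    """Best-of-N: draft n plans, score each, return the best one only if it
    clears min_score. Returning None means 'do not act'."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [Candidate(p, score(p)) for p in (propose(i) for i in range(n))]
    best = max(candidates, key=lambda c: c.score)
    return best if best.score >= min_score else None


# Toy usage with hard-coded plans and scores (illustrative values only).
plans = ["delete all staging buckets", "archive unused staging buckets", "do nothing"]
scores = {"delete all staging buckets": 0.2, "archive unused staging buckets": 0.8, "do nothing": 0.4}
choice = deliberate(lambda i: plans[i % len(plans)], scores.get, n=3)
print(choice.plan if choice else "no action")
```

The intermediate candidates and their scores are exactly the kind of reasoning trace that disappears unless something records it, which is the auditability problem raised above.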

The rise of autonomous agents introduces risks that go beyond individual model failures.

Autonomous error cascades can propagate flawed decisions across entire organizations in milliseconds, well before human operators can intervene.

Forensic opacity remains a major concern. Even when logs exist, reconstructing why an agent selected a particular course of action is non-trivial.
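One partial mitigation is to capture each decision, and the agent's stated reason for it, at the moment it is made, in a log that cannot be silently edited later. The sketch below is a hypothetical hash-chained audit log (the `AuditLog` class and its fields are illustrative assumptions, not a standard or product API). Note its limit: it preserves what the agent *reported* as its rationale, which is not guaranteed to match the model's actual internal process.

```python
import hashlib
import json
import time
from typing import Any

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Hypothetical append-only log of agent decisions. Each entry stores the
    SHA-256 hash of the previous entry, so editing an earlier record breaks
    the chain and is detected by verify()."""

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    @staticmethod
    def _digest(entry: dict[str, Any]) -> str:
        # Hash every field except the stored hash itself; args must be JSON-serializable.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, tool: str, args: dict[str, Any], rationale: str) -> dict[str, Any]:
        entry = {
            "step": len(self.entries),
            "time": time.time(),
            "tool": tool,
            "args": args,
            "rationale": rationale,  # the agent's stated reason, logged before acting
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = GENESIS
        for e in self.entries:
            if e["prev_hash"] != prev or e["hash"] != self._digest(e):
                return False
            prev = e["hash"]
        return True


log = AuditLog()
log.record("crm.update", {"account": 42, "tier": "gold"}, "Customer exceeded spend threshold")
log.record("email.send", {"to": "account-42"}, "Notify customer of tier change")
print(log.verify())
log.entries[0]["rationale"] = "edited after the fact"
print(log.verify())
```

The first check passes; after the first entry is altered, the second check fails because that entry's stored hash no longer matches its contents.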

These concerns are increasingly relevant as regulators prepare to enforce stricter oversight.

The European Union’s AI Act is being phased in, and 2026 brings a key milestone: most obligations for high-risk systems, including requirements related to transparency, traceability and human oversight, are scheduled to apply from August 2026.

While the legislation does not mandate specific technical architectures, enterprises deploying autonomous agents are under growing pressure to demonstrate meaningful control mechanisms.
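Since no architecture is prescribed, what a "meaningful control mechanism" looks like is left to each deployer. The sketch below is one hypothetical pattern, not a compliance recipe: an `OversightGate` wrapper (an illustrative name) that routes high-risk actions to a human approver and lets an operator halt the agent at any time. The `risk` score, `risk_threshold` and the `approve` callback are assumptions standing in for whatever policy and review process an organization actually uses.

```python
import threading
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    risk: float  # hypothetical risk score in [0, 1] assigned by an upstream policy


class OversightGate:
    """Hypothetical wrapper around an agent's executor with two controls:
    human approval for high-risk actions and a global stop switch."""

    def __init__(self, execute: Callable[[Action], str],
                 approve: Callable[[Action], bool],
                 risk_threshold: float = 0.7) -> None:
        self._execute = execute
        self._approve = approve        # stand-in for a human review step
        self._threshold = risk_threshold
        self._stopped = threading.Event()  # thread-safe flag an operator can set

    def halt(self) -> None:
        """Interrupt: every subsequent action is blocked."""
        self._stopped.set()

    def run(self, action: Action) -> str:
        if self._stopped.is_set():
            return f"blocked:{action.name} (agent halted)"
        if action.risk >= self._threshold and not self._approve(action):
            return f"rejected:{action.name}"
        return self._execute(action)


gate = OversightGate(execute=lambda a: f"done:{a.name}",
                     approve=lambda a: False,  # a reviewer who declines everything
                     risk_threshold=0.7)
print(gate.run(Action("draft_report", 0.1)))   # low risk: executes
print(gate.run(Action("wire_transfer", 0.9)))  # high risk, not approved: rejected
gate.halt()
print(gate.run(Action("draft_report", 0.1)))   # halted: blocked
```

Checking the stop flag before every action, rather than only at the start of a task, is what makes the agent interruptible mid-run instead of only between missions.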

Major AI developers are approaching agentic systems with distinct philosophies.

The divergence is not about raw intelligence but about how autonomy should be constrained.

The era of prompting is ending.

Organizations are no longer asking AI systems to write an email or summarize a report. They are assigning missions: manage a campaign, refactor infrastructure, optimize logistics.

The defining challenge of late 2025 is not capability but controllable autonomy.

As MindPulse has consistently argued, the central question is no longer whether AI systems are powerful enough, but whether they are predictable, governable and interruptible at scale.

We value feedback, corrections, and story tips. Reach us at mindpulsenetwork@proton.me