Artificial Intelligence in 2025: Breakthroughs, Power, and the Future of Governance

Photo: Yuichiro Chino/Getty Images

A year-end analysis of the scientific advances, systemic risks, and political forces shaping the global AI landscape.

In 2025, artificial intelligence crossed a threshold. What had once been framed primarily as an emerging technology became an infrastructural force shaping science, industry, governance, and society itself. Across disciplines, AI systems moved from experimental tools to foundational components of modern workflows.

This article examines the defining developments of the year — from breakthroughs in scientific discovery to the growing concentration of power and the widening gap in global AI governance. Rather than focusing on a single system or milestone, it offers a structural analysis of where AI now stands and what its trajectory reveals about the years ahead.

From Breakthroughs to Infrastructure

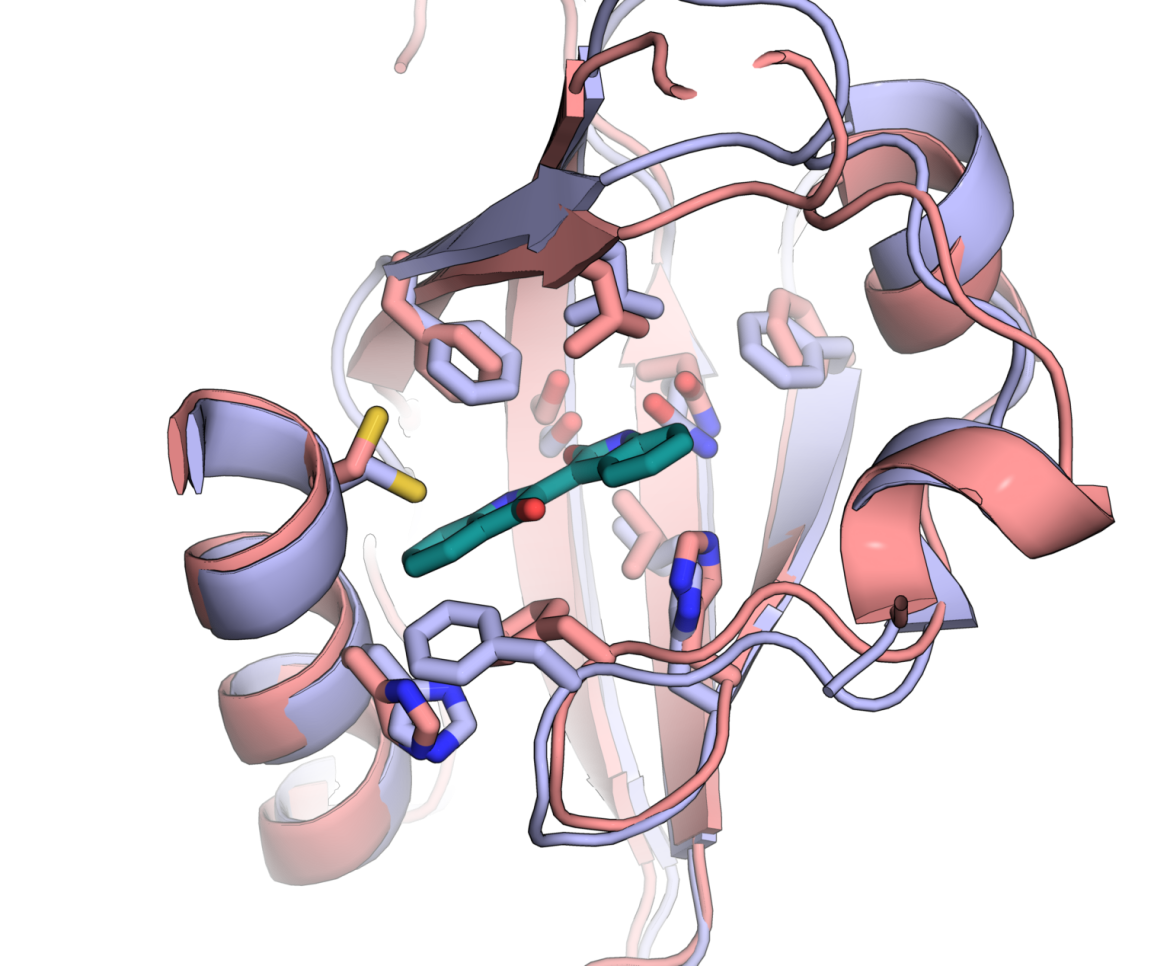

The most visible advances of 2025 occurred in scientific and technical domains. AI-driven systems dramatically accelerated research timelines, enabling discoveries that once required years of experimental effort to unfold in months or even weeks.

In fields such as biology, medicine, and materials science, AI models became routine instruments for hypothesis generation, simulation, and validation. These systems did not replace experimental science but restructured it, shifting the role of experimentation from exploration toward confirmation.

By 2025, public and private investment in AI infrastructure had reached levels comparable to those of other strategic technologies, reinforcing AI's role as a foundational layer of scientific and economic activity.

The result was not a single headline breakthrough, but a cumulative transformation: AI increasingly functions as shared cognitive infrastructure for scientific inquiry.

Foundation Models and the Concentration of Capability

Alongside scientific progress, 2025 underscored the growing role of large-scale foundation models. Trained on vast datasets and deployed across a wide range of applications, these systems now underpin everything from research assistance to creative production and software development.

Despite their widespread impact, the capacity to train and operate frontier-scale foundation models remains concentrated within a small number of organizations worldwide, introducing structural asymmetries in power, access, and influence.

As AI becomes embedded in critical decision-making processes, questions about transparency, accountability, and long-term stewardship have moved from theoretical debates to operational necessities.

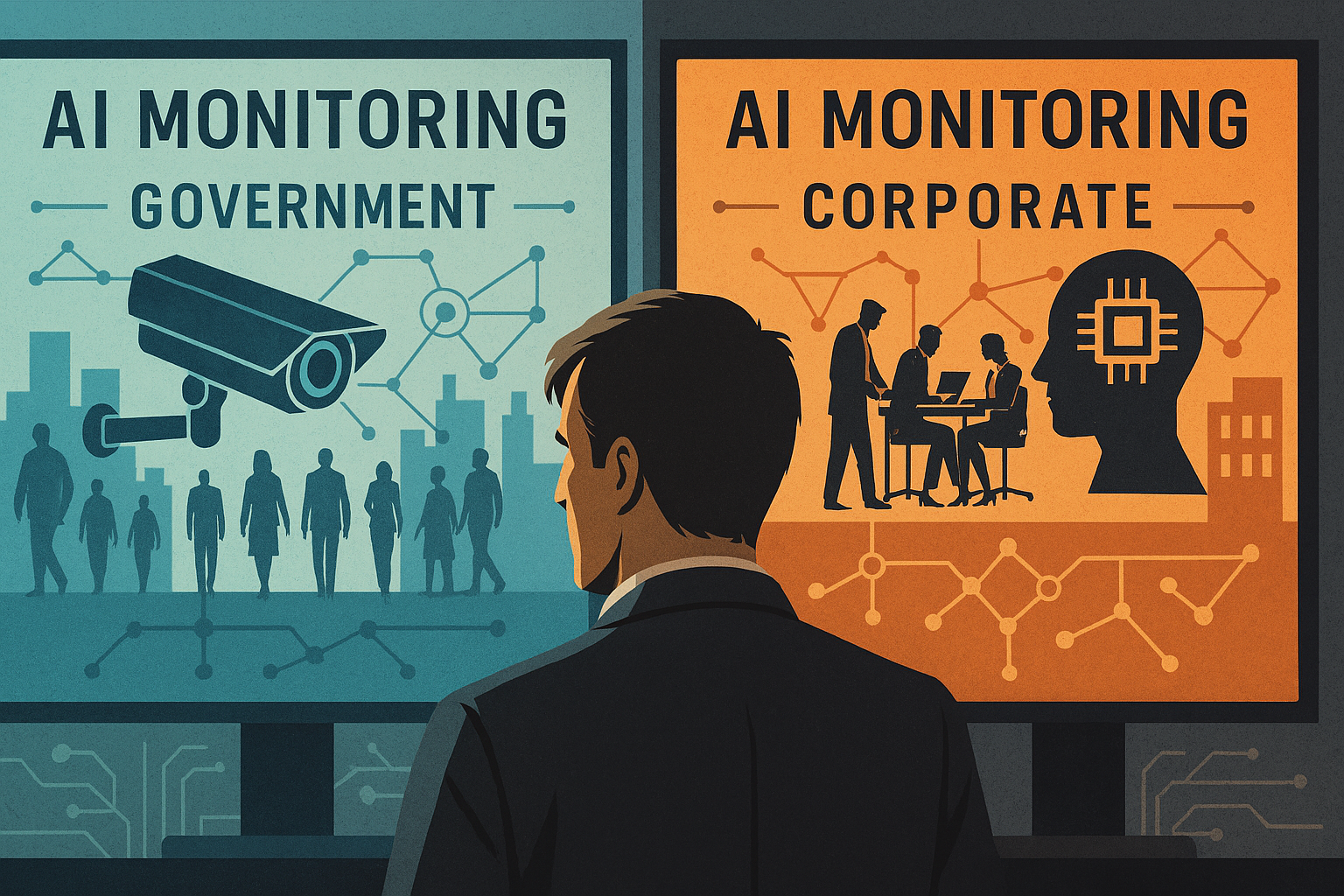

The Governance Gap Comes Into Focus

Perhaps the defining tension of 2025 was not technical, but institutional. While AI capabilities advanced rapidly, governance frameworks struggled to keep pace.

Regulatory approaches diverged sharply across regions. In broad terms, the European Union continued to emphasize civil and regulatory safeguards, while the United States increasingly framed AI through the lens of national security and strategic competition. This divergence has produced a fragmented global landscape with limited coordination.

As highlighted in recent international policy assessments, the pace of AI capability development continues to outstrip existing governance mechanisms, leaving systemic risks unevenly addressed across jurisdictions.

From Acceleration to Alignment

If acceleration defined the early phase of AI development, 2025 marked the beginning of a transition toward alignment. The central challenge is no longer whether AI systems can perform at scale, but whether their deployment can be coherently integrated with human values, institutional constraints, and democratic oversight.

Technical excellence without governance risks producing systems that are powerful yet brittle. Conversely, governance without technical understanding risks becoming symbolic rather than effective. Bridging this divide is now one of the defining tasks of the AI era.

Looking Ahead: 2026

As attention turns toward 2026, the central question will not be whether AI systems continue to improve, but whether governance mechanisms can mature at a comparable pace. The primary risk is no longer technological stagnation, but institutional lag in the face of rapidly consolidating cognitive power.

Conclusion: A Threshold Year

Looking back, 2025 stands as a threshold year for artificial intelligence. It is the moment when AI ceased to be merely an innovation story and became a matter of societal infrastructure.

The direction forward is not predetermined. It will depend on how societies choose to balance capability with accountability, speed with deliberation, and innovation with institutional design.

What is already clear is that the window for shaping AI's long-term role is open now. The choices made in the coming years will define not only the technology itself, but also the conditions under which knowledge, power, and trust evolve in the digital age.