Europe Moves Forward on AI Governance

Photo: Sébastien Bertrand / Flickr (CC BY 2.0).

Europe is moving decisively to define how artificial intelligence should be governed — not merely as a technological tool, but as a force that must remain compatible with fundamental rights, democratic values and human agency.

As AI capabilities accelerate worldwide, the European Union is advancing a regulatory framework that seeks to protect citizens without freezing innovation, in what is arguably the most ambitious experiment in AI governance to date.

A Risk-Based Framework for Trustworthy AI

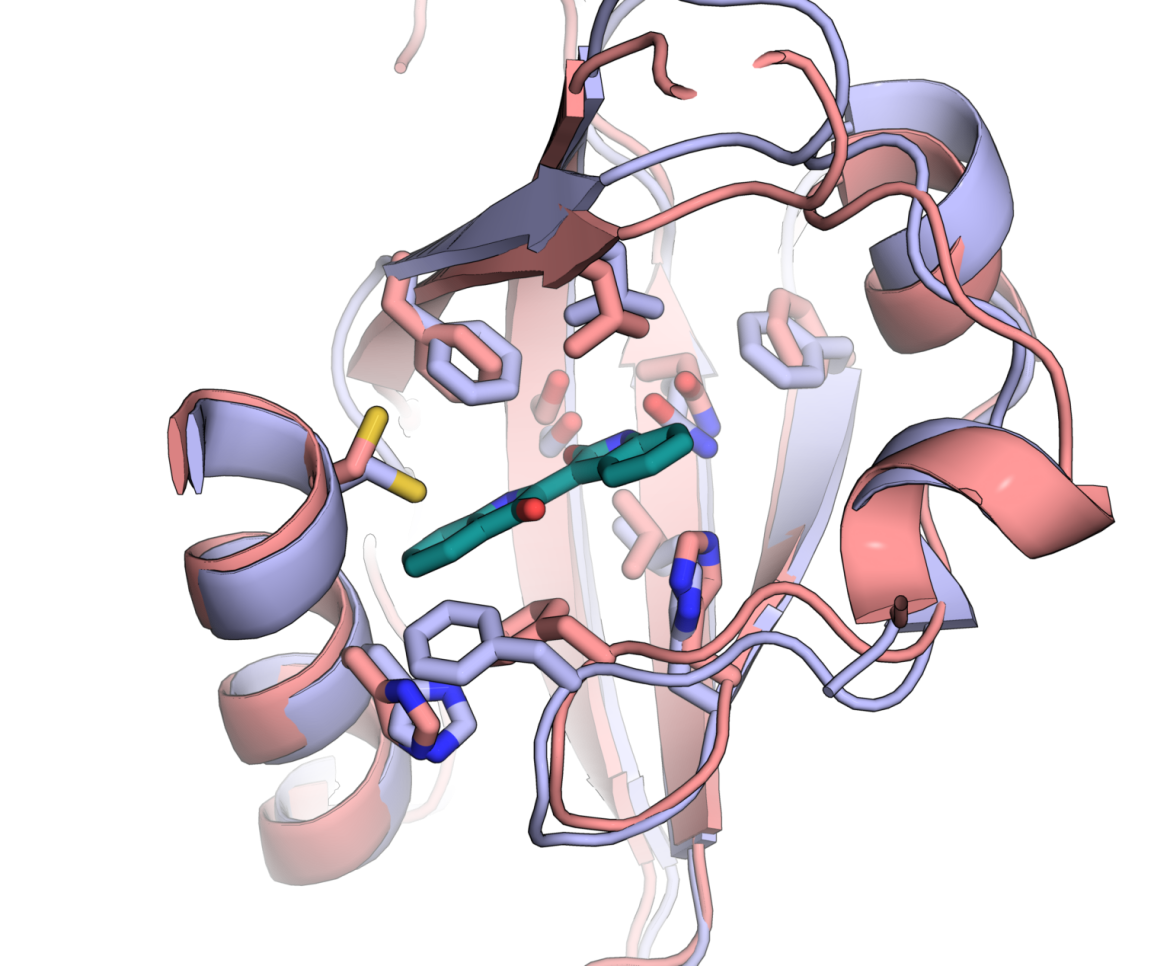

At the core of Europe’s strategy lies a risk-based regulatory model, formalised in the EU Artificial Intelligence Act, the first comprehensive AI law approved by a major global jurisdiction.

According to the official announcement by the Council of the European Union, the legislation aims to foster responsible AI development while safeguarding fundamental rights, safety and democracy.

The framework introduces transparency obligations for generative AI, mandatory risk assessments for high-risk systems, clear accountability rules for deployers, and explicit bans on practices such as indiscriminate biometric mass surveillance.

Regulation Meets Innovation

While European institutions frame the AI Act as an enabler of trust, reactions from the innovation ecosystem remain mixed.

Academic experts have broadly welcomed the approach. In a policy brief cited by Euronews, researchers argue that legal certainty may ultimately benefit innovation by reducing reputational and legal risks for compliant developers.

At the same time, startup associations have warned that compliance costs and regulatory complexity could disproportionately affect smaller teams. The European Startup Network has stressed the need for proportional enforcement to avoid reinforcing market concentration.

Europe as a Global Reference Point

Much like the GDPR reshaped global data protection standards, the AI Act is already influencing regulatory discussions beyond Europe. Governments worldwide are increasingly referencing Brussels’ framework as a potential baseline.

The European Commission explicitly frames the legislation as part of its broader push for trustworthy AI at a global level.

AI Governance as a Societal Project

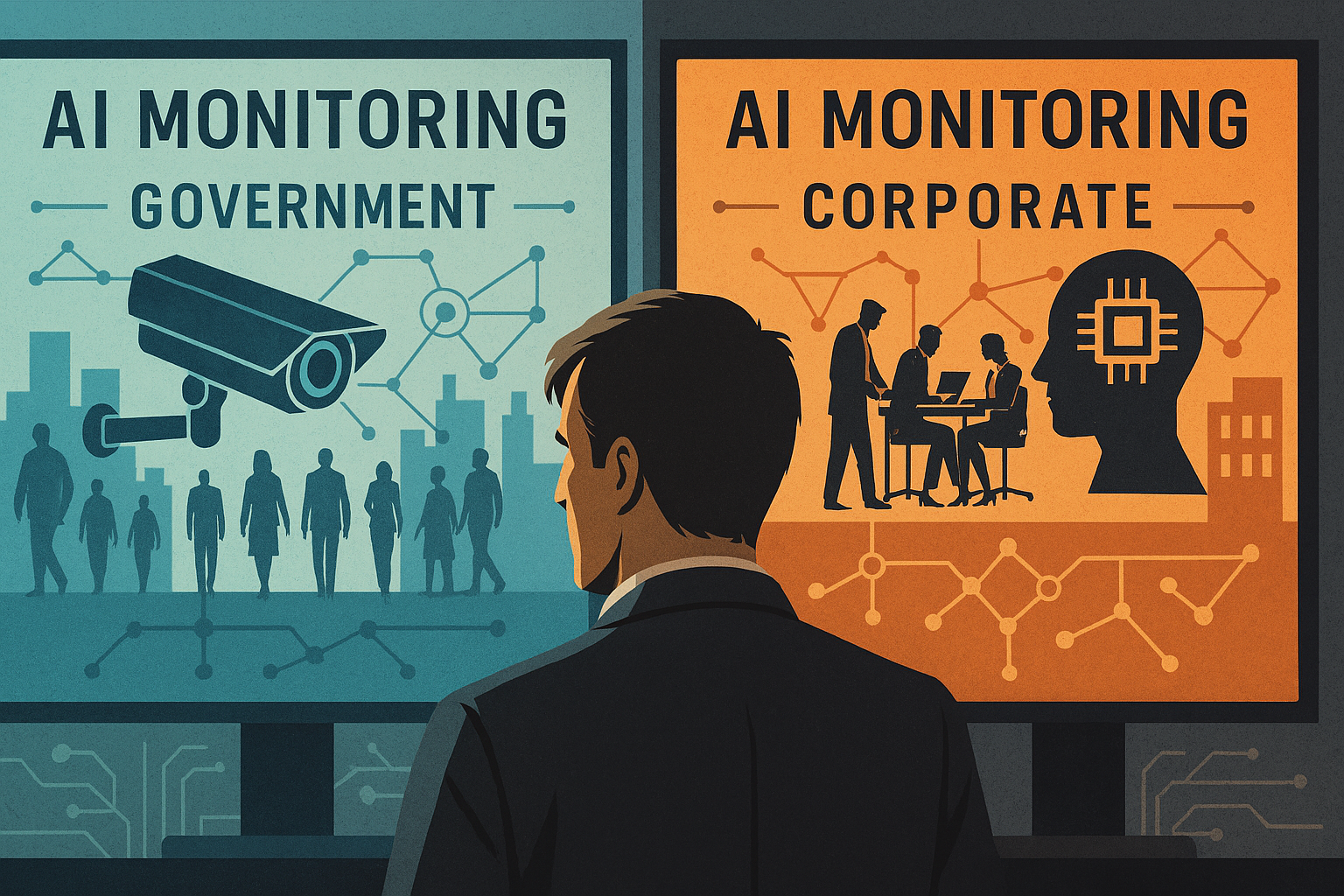

What distinguishes the European approach is its insistence that AI governance is not merely technical, but societal. As automated systems increasingly shape access to information, employment and public services, defining their boundaries becomes a democratic responsibility.

Beyond Institutions

Regulation alone will not determine the future of AI. Independent communities, journalists and civic networks play a crucial role in monitoring implementation, translating complex rules for the public and holding both institutions and corporations accountable.

In this context, initiatives such as MindPulse Network serve as a vital complement to formal governance, helping to ensure that AI remains aligned with human values long after the legislation is passed.
