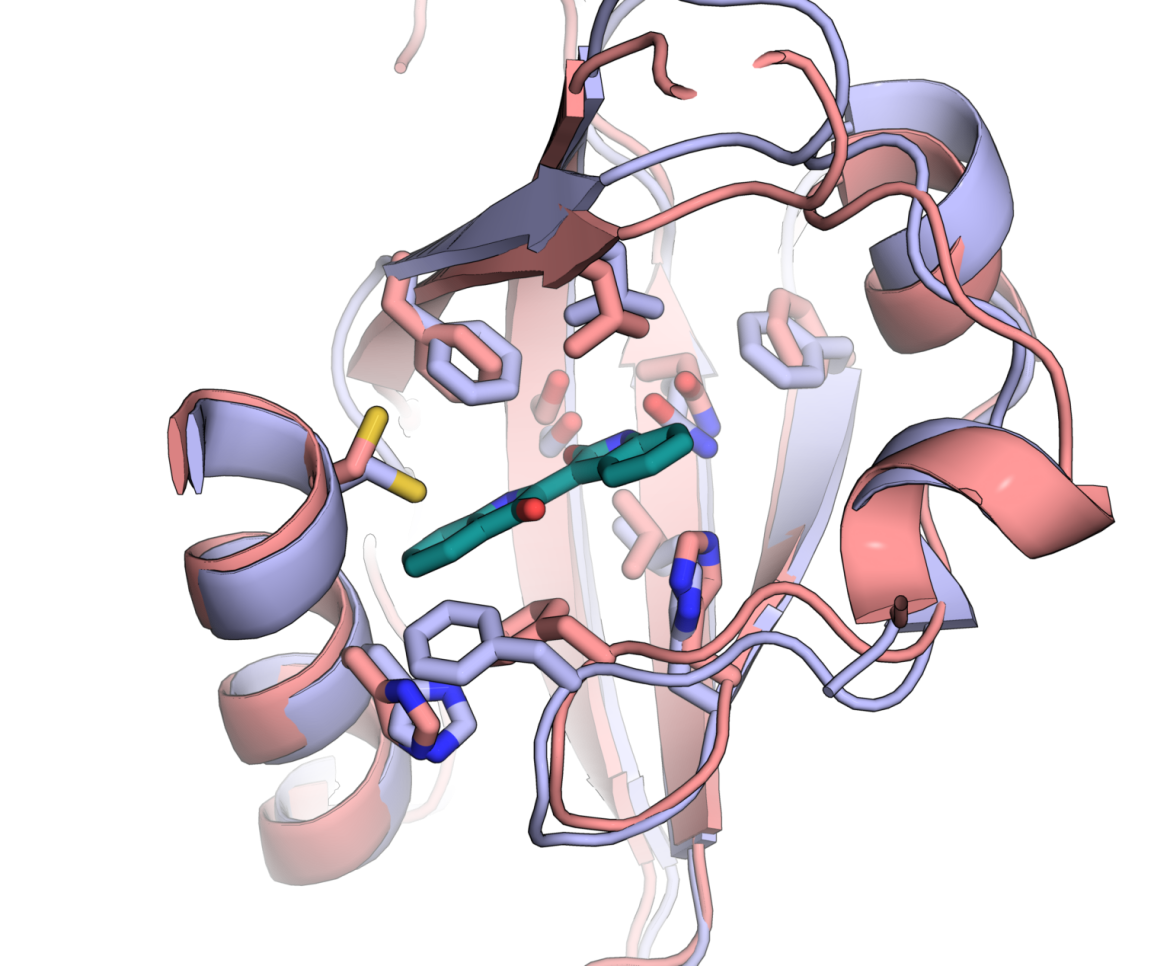

AlphaFold 5 Years Later

How AI revolutionized structural biology and transformed protein research.

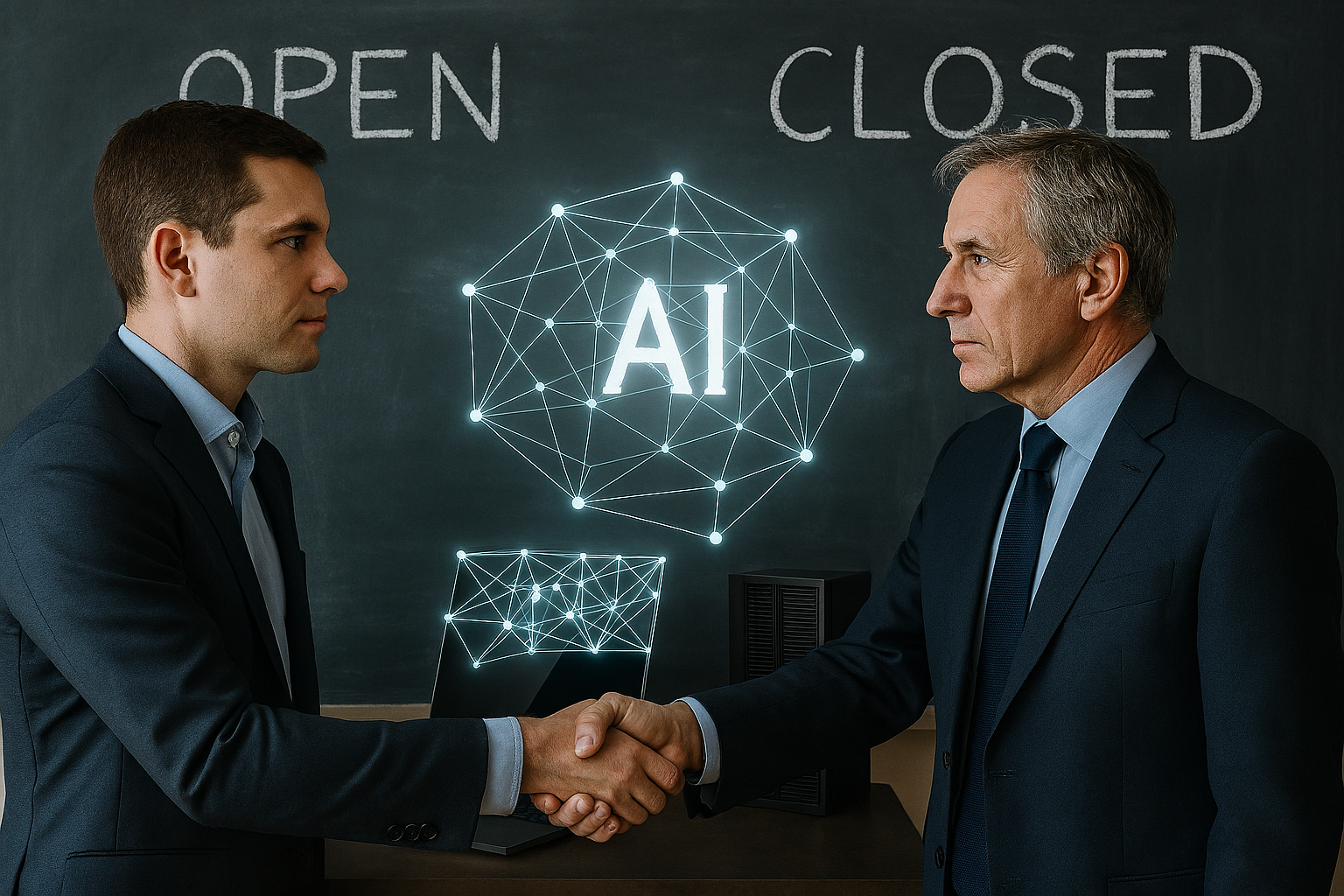

As the performance gap between closed and open AI models collapses, organizations are choosing sovereignty over subscriptions — with profound economic and geopolitical consequences.

Just two years ago, Silicon Valley operated under a “moat” philosophy: scale, proprietary data and compute concentration would guarantee permanent dominance. That moat is now fractured. Intelligence is no longer a scarce resource rented from a handful of labs, but an increasingly distributed strategic asset.

The narrative of proprietary supremacy did not end with regulation or rebellion, but with benchmarks. According to the Stanford HAI AI Index, the performance delta between top closed models and leading open-weight alternatives has narrowed dramatically across enterprise-grade tasks.

This comparison relies on HELM (Holistic Evaluation of Language Models), an evaluation framework that tests models across dozens of representative scenarios, including legal reasoning, medical QA and code generation. Near-parity on these benchmarks does not imply equivalence in every specialized domain, but it signals convergence in the areas where most organizational value is generated.
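
To make the idea of a shrinking “performance delta” concrete, here is a minimal sketch that measures the open model's shortfall as a fraction of the closed model's score in each scenario and then averages it. The scenario names echo the categories above, but the scores are invented placeholders, not HELM or AI Index data. The metric is also an illustrative choice: HELM's own leaderboards aggregate results differently, for example by mean win rate.

```python
from statistics import mean

# Hypothetical per-scenario scores on a 0-1 scale; placeholders, not real benchmark data.
CLOSED = {"legal_reasoning": 0.82, "medical_qa": 0.88, "code_generation": 0.79}
OPEN = {"legal_reasoning": 0.79, "medical_qa": 0.85, "code_generation": 0.76}


def relative_gap(closed: float, open_: float) -> float:
    """Open model's shortfall, expressed as a fraction of the closed model's score."""
    if closed <= 0:
        raise ValueError("closed-model score must be positive")
    return (closed - open_) / closed


def mean_gap(closed_scores: dict[str, float], open_scores: dict[str, float]) -> float:
    """Average relative gap over the scenarios both models were evaluated on."""
    shared = closed_scores.keys() & open_scores.keys()
    if not shared:
        raise ValueError("no shared scenarios to compare")
    return mean(relative_gap(closed_scores[s], open_scores[s]) for s in shared)


if __name__ == "__main__":
    for scenario in sorted(CLOSED):
        print(f"{scenario}: {relative_gap(CLOSED[scenario], OPEN[scenario]):.1%}")
    print(f"mean gap: {mean_gap(CLOSED, OPEN):.1%}")
```

With these placeholder numbers the average gap comes out to a few percent. Using a relative rather than absolute difference keeps scenarios with different score ranges on comparable terms when they are averaged.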

“We are hitting a plateau in raw LLM scaling for general tasks,” said Meta Chief AI Scientist Yann LeCun. “When marginal gains diminish, transparency and fine-tuning become decisive advantages.”

For executives, the appeal of the API-only economy is fading. An analysis cited by IT Pro shows that enterprises can reduce long-term AI operational costs by up to 80% by deploying open models in-house.

The logic is structural:

This shift transforms AI from an unpredictable operating expense into a controlled strategic asset — a decision increasingly driven by CFOs as much as CTOs.
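
The structural argument can be made concrete with a back-of-envelope model: API spending grows linearly with token volume, while self-hosting is dominated by a fixed monthly cost plus a small per-token marginal cost. The sketch below assumes that simple cost shape; every price in it is a hypothetical placeholder, not a figure from the IT Pro analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostModel:
    """Toy monthly cost comparison; all inputs are hypothetical placeholders."""

    api_price_per_m: float  # API price per million tokens (USD)
    fixed_monthly: float    # self-hosting fixed cost per month, e.g. amortized hardware and staff (USD)
    marginal_per_m: float   # self-hosting variable cost per million tokens, e.g. power (USD)

    def api_cost(self, m_tokens: float) -> float:
        return self.api_price_per_m * m_tokens

    def self_hosted_cost(self, m_tokens: float) -> float:
        return self.fixed_monthly + self.marginal_per_m * m_tokens

    def break_even(self) -> float:
        """Monthly volume (millions of tokens) above which self-hosting is cheaper."""
        spread = self.api_price_per_m - self.marginal_per_m
        if spread <= 0:
            return float("inf")  # self-hosting never catches up with the API price
        return self.fixed_monthly / spread

    def savings(self, m_tokens: float) -> float:
        """Fraction of API spend saved by self-hosting; negative means self-hosting costs more."""
        if m_tokens <= 0:
            raise ValueError("volume must be positive")
        api = self.api_cost(m_tokens)
        return (api - self.self_hosted_cost(m_tokens)) / api


if __name__ == "__main__":
    # Hypothetical inputs: $10 per million API tokens, $20,000/month fixed, $1 per million marginal.
    model = CostModel(api_price_per_m=10.0, fixed_monthly=20_000.0, marginal_per_m=1.0)
    print(f"break-even: {model.break_even():,.0f}M tokens/month")
    for volume in (1_000, 10_000, 50_000):
        print(f"{volume:>6,}M tokens: savings {model.savings(volume):.0%}")
```

With these placeholder inputs, self-hosting breaks even at roughly 2,200 million tokens per month and saves 70% at 10,000 million, while at low volume it costs more than the API. In this toy model the savings approach 1 - marginal/API price (90% here) as volume grows, which is why any “up to” figure depends heavily on how much sustained load an organization actually has.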

Critics warn that open weights enable uncontrolled proliferation. Once released, models cannot be globally “patched.” These concerns are legitimate — but incomplete.

A recent peer-reviewed analysis in the journal Computers notes that risks such as model inversion and misuse can be significantly mitigated through deployment-level controls, red-teaming, access restrictions and provenance logging.
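
Two of those controls, access restrictions and provenance logging, can be illustrated together. The sketch below gates each request on a role-to-model allowlist and records every attempt in a hash-chained log, so that later tampering with an entry is detectable. The roles, model names and log fields are hypothetical, and this is a generic technique, not the method described in the Computers paper.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

# Hypothetical policy: which roles may call which locally hosted models.
ALLOWED = {
    "analyst": {"open-model-small"},
    "engineer": {"open-model-small", "open-model-large"},
}

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class ProvenanceLog:
    """Append-only log in which each entry commits to the hash of the previous one."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(record: dict) -> str:
        return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()

    def append(self, user: str, model: str, prompt: str, allowed: bool) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {
            "ts": time.time(),
            "user": user,
            "model": model,
            # Store a digest so the log does not retain the raw prompt text.
            "prompt_sha256": hashlib.sha256(prompt.encode()).hexdigest(),
            "allowed": allowed,
            "prev": prev,
        }
        entry = {**record, "hash": self._digest(record)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; editing any entry, or deleting one mid-chain, fails."""
        prev = GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev"] != prev or self._digest(record) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


def gated_call(log: ProvenanceLog, user: str, role: str, model: str, prompt: str) -> bool:
    """Check the role allowlist, log the attempt either way, and return the decision."""
    allowed = model in ALLOWED.get(role, set())
    log.append(user, model, prompt, allowed)
    return allowed


if __name__ == "__main__":
    log = ProvenanceLog()
    print(gated_call(log, "ana", "analyst", "open-model-small", "Summarize this contract."))
    print(gated_call(log, "ana", "analyst", "open-model-large", "Summarize this contract."))
    print("chain intact:", log.verify())
```

A hash chain only detects changes inside the chain. To catch someone quietly dropping the newest entries, the latest hash would also need to be anchored somewhere the operator cannot rewrite.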

The regulatory center of gravity is shifting accordingly. The EU AI Act prioritizes auditing and oversight of high-risk deployments rather than prohibiting model code itself — a pragmatic recognition that control belongs at the point of use.

As we enter 2026, a hybrid equilibrium is emerging. Roughly 5% of use cases (frontier scientific research and the most demanding reasoning workloads) may remain concentrated within proprietary giants. The remaining 95%, the bulk of the digital economy, will run on open, auditable and locally governed intelligence.

Proprietary power has not disappeared — but it has lost its monopoly on intelligence.

We value feedback, corrections, and story tips. Reach us at mindpulsenetwork@proton.me